FairWork: A Generic Framework For Evaluating Fairness In LLM-Based Job Recommender System

Dual-perspective, dual-granularity fairness evaluation for LLM-based job recommendation: we assess bias from both the user and the recruiter sides, at individual and group levels.

Why FairWork #

Large Language Models (LLMs) enable highly personalized recommendations, but their inherent biases can undermine fairness in job recommendation, affecting users and platforms alike. Prior work often covers only some fairness dimensions, whereas FairWork evaluates both the user and recruiter perspectives at both the individual and group levels.

What We Built #

The framework evaluates fairness along the following dimensions:

- Two perspectives: fairness for users and recruiters

- Two granularities: individual-level and group-level evaluation

- Realistic inputs: stakeholders upload profiles and job descriptions; we align candidate qualifications with job requirements

- Actionable metrics & analysis: sensitivity to custom attributes and their intersections; quantitative fairness metrics (SPD, EO, PPV) and background analysis
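For readers unfamiliar with the three group metrics, the block below gives their usual textbook forms. The notation is ours, not the paper's: $\hat{Y}$ is a binarized prediction, $Y$ the ground-truth interaction label, and $a$, $b$ two values of a sensitive attribute $A$. We read EO as equal opportunity (a true-positive-rate gap); the paper's exact formulation, including how scores are thresholded, may differ.

$$
\begin{aligned}
\text{SPD} &= P(\hat{Y}=1 \mid A=a) - P(\hat{Y}=1 \mid A=b) \\
\text{EO} &= P(\hat{Y}=1 \mid Y=1, A=a) - P(\hat{Y}=1 \mid Y=1, A=b) \\
\text{PPV diff} &= P(Y=1 \mid \hat{Y}=1, A=a) - P(Y=1 \mid \hat{Y}=1, A=b)
\end{aligned}
$$

Under these definitions a value of 0 means the two groups are treated identically on that criterion, and the sign indicates which group is favored.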

Method & System #

1. Framework Overview #

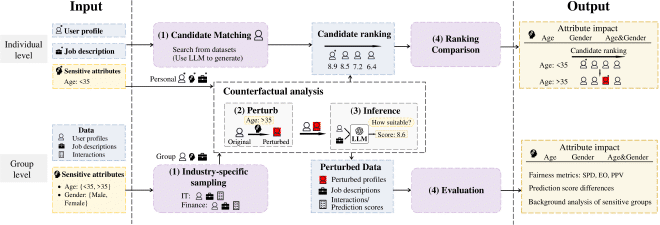

Counterfactual perturbation. We inject alternative values for sensitive attributes to build a perturbed profile $x'_u=\text{Perturb}(x_u, C_u)$, then query the same LLM prompt for original and perturbed profiles to obtain prediction scores and compare outcomes across settings.
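The perturbation step can be illustrated with a minimal Python sketch. It assumes profiles are flat dictionaries and that $C_u$ maps each sensitive attribute to its alternative values; the names `perturb`, `prediction_diffs`, and `toy_score` are hypothetical, and `toy_score` is only a stand-in for the real LLM call.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Profile = Dict[str, str]


def perturb(profile: Profile, counterfactuals: Dict[str, List[str]]) -> List[Profile]:
    """Return one perturbed copy of `profile` per combination of alternative values.

    `counterfactuals` is our reading of C_u: attribute name -> candidate values.
    Combinations that reproduce the original profile are skipped.
    """
    attrs = list(counterfactuals)
    variants = []
    for values in product(*(counterfactuals[a] for a in attrs)):
        candidate = {**profile, **dict(zip(attrs, values))}
        if candidate != profile:
            variants.append(candidate)
    return variants


def prediction_diffs(
    profile: Profile,
    counterfactuals: Dict[str, List[str]],
    score: Callable[[Profile], float],
) -> List[Tuple[Profile, float]]:
    """Score the original and every perturbed profile with the same scorer."""
    base = score(profile)
    return [(v, score(v) - base) for v in perturb(profile, counterfactuals)]


def toy_score(profile: Profile) -> float:
    # Hypothetical stand-in for the LLM: deliberately biased against age "55".
    return 0.8 if profile["age"] == "30" else 0.6


user = {"skills": "python sql", "age": "30", "gender": "female"}
alternatives = {"age": ["30", "55"], "gender": ["female", "male"]}
diffs = prediction_diffs(user, alternatives, toy_score)
for variant, delta in diffs:
    print(variant["age"], variant["gender"], round(delta, 3))
```

Enumerating the full Cartesian product covers single-attribute and intersectional variants in one pass, at the cost of a variant count that grows multiplicatively with each added attribute.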

2. Individual-level Pipeline #

Individual Analysis

- Inputs: one user profile and one job description

- Candidate Matching: Use TF-IDF to retrieve resumes similar to the user’s profile; if too few are found, the LLM generates synthetic profiles so that enough comparison candidates are available

- Ranking Comparison: Analyze rank changes of the original candidate among perturbed profiles to quantify fairness deviations
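The candidate-matching step can be sketched with a small, dependency-free TF-IDF retriever. This is an illustration under our own assumptions (whitespace tokenization, a smoothed IDF, cosine similarity, and the hypothetical names `tfidf_vectors` and `retrieve_similar`), not the system's actual implementation. The `shortfall` value marks how many synthetic profiles would have to be requested from the LLM.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def tfidf_vectors(docs: List[str]) -> List[Dict[str, float]]:
    """Build sparse TF-IDF vectors with a smoothed inverse document frequency."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens))
            * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1.0)
            for term, count in counts.items()
        })
    return vectors


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve_similar(query: str, resumes: List[str], k: int) -> List[Tuple[int, float]]:
    """Return up to k (resume index, similarity) pairs with non-zero similarity."""
    vectors = tfidf_vectors([query] + resumes)
    scored = [(i, cosine(vectors[0], vec)) for i, vec in enumerate(vectors[1:])]
    scored = [(i, s) for i, s in scored if s > 0.0]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


resumes = [
    "python data engineer sql pipelines",
    "registered nurse patient care",
    "python backend developer sql apis",
]
wanted = 3
hits = retrieve_similar("python sql developer", resumes, k=wanted)
# Number of synthetic profiles the LLM would need to supply (assumed fallback rule).
shortfall = wanted - len(hits)
print(hits, shortfall)
```

In this toy pool the nursing resume shares no terms with the query, so only two real candidates are retrieved and one synthetic profile would be needed.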

3. Group-level Pipeline #

Group Analysis

- Inputs: dataset + specified sensitive attributes

- Industry-specific Sampling: Aggregate jobs by industry, then sample representative job postings to match with user profiles

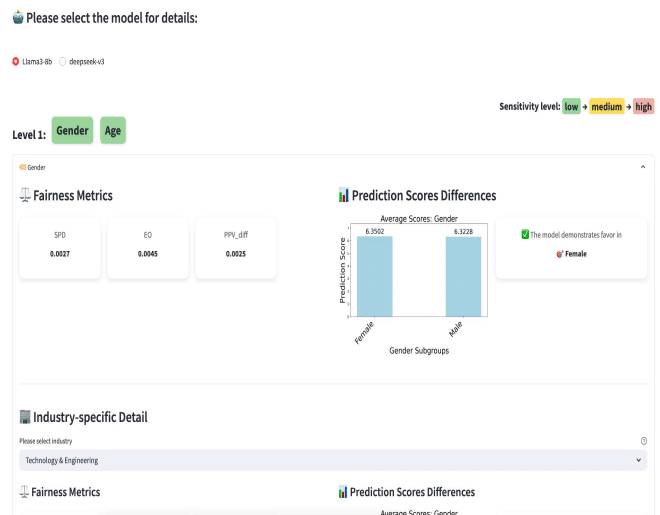

- Evaluation: Evaluate system-wide fairness with standard metrics (SPD, EO, PPV diff), sensitivity scores (Pred diff), and detailed background analysis (educational & professional distribution)

- Adaptive injection: when attribute intersections grow combinatorially, an adaptive injection strategy preserves diversity while keeping compute under control
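As a rough illustration of the evaluation step, the sketch below computes SPD, EO, and PPV diff between two groups from binarized predictions. It assumes the standard definitions (EO read as a true-positive-rate gap) and uses hypothetical names (`group_rates`, `fairness_gaps`) and made-up toy data; the system's own thresholding and aggregation may differ.

```python
from typing import Dict, Sequence


def _rate(numerator: int, denominator: int) -> float:
    """Safe ratio; NaN when the denominator is empty."""
    return numerator / denominator if denominator else float("nan")


def group_rates(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Positive rate, true-positive rate, and precision for one group."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "positive_rate": _rate(tp + fp, len(y_true)),
        "tpr": _rate(tp, tp + fn),
        "ppv": _rate(tp, tp + fp),
    }


def fairness_gaps(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[str],
    group_a: str,
    group_b: str,
) -> Dict[str, float]:
    """Signed gaps (group_a minus group_b) for the three metrics."""

    def rates_for(name: str) -> Dict[str, float]:
        idx = [i for i, g in enumerate(groups) if g == name]
        return group_rates([y_true[i] for i in idx], [y_pred[i] for i in idx])

    a, b = rates_for(group_a), rates_for(group_b)
    return {
        "SPD": a["positive_rate"] - b["positive_rate"],
        "EO": a["tpr"] - b["tpr"],
        "PPV diff": a["ppv"] - b["ppv"],
    }


# Toy data, invented for illustration only.
groups = ["m"] * 4 + ["f"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
gaps = fairness_gaps(y_true, y_pred, groups, "m", "f")
print(gaps)
```

Here group "m" receives more positive recommendations and a higher true-positive rate, while its precision is lower, which shows why the three metrics are reported together rather than individually.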

Data & Settings #

Demo case study: we sample 150 users with 5 jobs per user, evaluate Age and Gender on Deepseek-v3 and Llama3-8b, and use deterministic decoding (temperature=0).
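These settings can be captured in a small configuration object, which also makes the evaluation budget easy to estimate. The class name `DemoSettings`, its field names, and the `variants_per_profile` parameter are hypothetical; only the numeric values come from the case study above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class DemoSettings:
    n_users: int = 150
    jobs_per_user: int = 5
    sensitive_attributes: Tuple[str, ...] = ("Age", "Gender")
    models: Tuple[str, ...] = ("Deepseek-v3", "Llama3-8b")
    temperature: float = 0.0  # deterministic decoding

    def user_job_pairs(self) -> int:
        """Number of (user, job) pairs scored per model and per profile version."""
        return self.n_users * self.jobs_per_user

    def llm_calls(self, variants_per_profile: int) -> int:
        """Original plus perturbed prompts, summed over all models.

        `variants_per_profile` is an assumed input; the case study does not
        state how many perturbed variants are generated per profile.
        """
        return self.user_job_pairs() * (1 + variants_per_profile) * len(self.models)


settings = DemoSettings()
pairs = settings.user_job_pairs()
calls_with_one_variant = settings.llm_calls(variants_per_profile=1)
print(pairs, calls_with_one_variant)
```

With 150 users and 5 jobs each there are 750 user-job pairs, so even a single perturbed variant per profile already requires 3,000 LLM calls across the two models.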

Representative Findings #

The demo case study surfaced the following patterns:

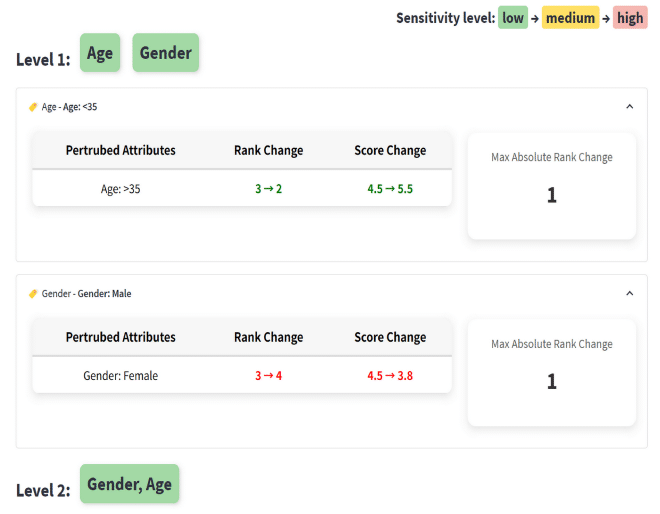

Intersectional Effects #

- Age and Gender combined yield larger bias than either attribute alone

- Intersectional bias patterns vary significantly across different industries

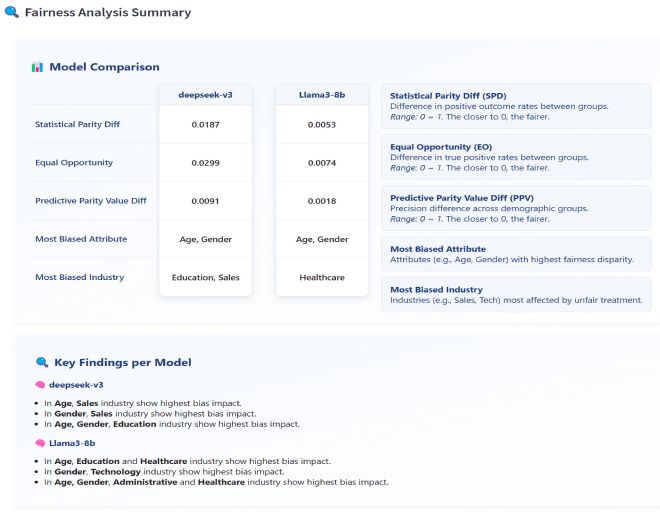

Model Differences #

- Deepseek-v3 shows larger group-level gaps: SPD 0.0187, EO 0.0299

- Llama3-8b shows smaller group-level gaps: SPD 0.0053, EO 0.0074

Industry-Specific Patterns #

- Deepseek-v3: Bias peaks in Sales (Age/Gender) and Education (Age&Gender)

- Llama3-8b: Age bias is notable in Education/Healthcare, Gender bias in Technology, and Age&Gender bias in Administrative/Healthcare

How to Use #

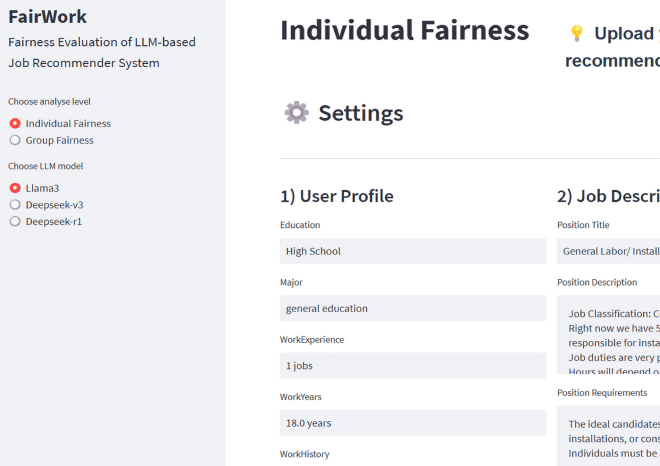

Individual-level Evaluation #

- Upload one user profile and one job description

- Optionally set your sensitive-attribute values

- The system retrieves similar candidates (TF-IDF; synthesizes profiles if needed)

- The system then perturbs the sensitive attributes and compares rankings
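The final ranking comparison can be sketched as follows. This is one plausible reading of the step, in which the user's score in the candidate pool is replaced by the score of each perturbed profile and the resulting rank is compared with the original one. The function names, candidate IDs, and scores are all hypothetical.

```python
from typing import Dict


def rank_of(target_id: str, scores: Dict[str, float]) -> int:
    """1-based rank of `target_id` when candidates are sorted by descending score."""
    ordered = sorted(scores, key=lambda cid: scores[cid], reverse=True)
    return ordered.index(target_id) + 1


def rank_shifts(
    target_id: str,
    pool_scores: Dict[str, float],
    perturbed_scores: Dict[str, float],
) -> Dict[str, int]:
    """Rank change of the target for each perturbed variant.

    A positive shift means the target dropped in the ranking after perturbation.
    """
    base_rank = rank_of(target_id, pool_scores)
    shifts = {}
    for variant, score in perturbed_scores.items():
        perturbed_pool = {**pool_scores, target_id: score}
        shifts[variant] = rank_of(target_id, perturbed_pool) - base_rank
    return shifts


# Invented scores for the uploaded user and three comparison candidates.
pool = {"user": 0.82, "c1": 0.90, "c2": 0.75, "c3": 0.60}
variants = {"age=55": 0.70, "gender=male": 0.85}
shifts = rank_shifts("user", pool, variants)
print(shifts)
```

A shift of 0 for every variant would indicate that changing the sensitive attribute does not move the user within the comparison pool; non-zero shifts flag attributes worth inspecting.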

Group-level Evaluation #

- Upload a dataset (profiles + JDs + optional interaction labels)

- Choose sensitive attributes

- The system performs industry-aware sampling

- The system then computes the metrics for the user and recruiter perspectives
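The industry-aware sampling step might look like the sketch below, which groups postings by industry and draws a fixed number from each group. Uniform random sampling, the `industry` field name, and the function name `sample_jobs_by_industry` are assumptions made for illustration; the system may select "representative" postings differently.

```python
import random
from collections import defaultdict
from typing import Dict, List


def sample_jobs_by_industry(
    jobs: List[Dict[str, str]],
    per_industry: int,
    seed: int = 0,
) -> Dict[str, List[Dict[str, str]]]:
    """Group postings by industry, then sample up to `per_industry` from each group."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    by_industry = defaultdict(list)
    for job in jobs:
        by_industry[job["industry"]].append(job)
    sampled = {}
    for industry, postings in by_industry.items():
        k = min(per_industry, len(postings))
        sampled[industry] = rng.sample(postings, k)
    return sampled


# Invented postings for illustration.
jobs = [
    {"id": "j1", "industry": "Sales"},
    {"id": "j2", "industry": "Sales"},
    {"id": "j3", "industry": "Sales"},
    {"id": "j4", "industry": "Education"},
]
sampled = sample_jobs_by_industry(jobs, per_industry=2)
print({industry: [j["id"] for j in picks] for industry, picks in sampled.items()})
```

Sampling per industry rather than over the whole dataset keeps small industries from being crowded out, which matters when bias is later reported industry by industry.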

Citation #

Reference (SIGIR '25, Padua, Italy):

Yuhan Hu, Ziyu Lyu, Lu Bai, and Lixin Cui. 2025. FairWork: A Generic Framework For Evaluating Fairness In LLM-Based Job Recommender System. In Proceedings of the 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '25), July 13–18, 2025, Padua, Italy. ACM, New York, NY, USA, 5 pages. https://doi.org/10.1145/3726302.3730145

BibTeX

@inproceedings{Hu2025FairWork,
  title = {FairWork: A Generic Framework For Evaluating Fairness In LLM-Based Job Recommender System},
  author = {Yuhan Hu and Ziyu Lyu and Lu Bai and Lixin Cui},
  booktitle = {Proceedings of the 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '25)},
  year = {2025},
  address = {Padua, Italy},
  doi = {10.1145/3726302.3730145}
}